Data Observability: Building Resilient and Transparent Data Ecosystems

1. Introduction: The Strategic Importance of Data Observability

In an era where data is the backbone of strategic decision-making, the importance of data observability has never been greater. As data flows through complex systems, across cloud environments, and within distributed networks, ensuring its accuracy, reliability, and availability has become paramount. Without robust observability, data issues can remain undetected, potentially undermining key business initiatives, diminishing stakeholder trust, and incurring compliance risks.

According to Gartner: “By 2026, 50% of enterprises implementing distributed data architectures will have adopted data observability tools to improve visibility over the state of the data landscape, up from less than 20% in 2024.”

Let’s establish a practical definition of data observability.

Data observability is the ability to understand and monitor the entire lifecycle of data, from ingestion through transformation to consumption, in real time, and to do so securely, transparently, and in a compliant manner.

Data observability provides organizations with end-to-end visibility into their data ecosystems, allowing them to monitor data health, track data lineage, and diagnose issues in real time. It turns data management from a reactive process, in which teams scramble to address issues after they have affected the business, into a proactive discipline that identifies and resolves data quality concerns before they escalate. It provides deep insight into the quality, performance, and behaviour of data across pipelines and systems. In a modern enterprise, data observability helps ensure that data-based business decisions are trustworthy, accurate, and timely.

By investing in a data observability framework, companies can ensure that their data remains a strategic asset rather than a liability. Observability enables enterprises to take control of their data ecosystem, reducing operational bottlenecks and providing the transparency needed for high-stakes decision-making. As data ecosystems grow more complex, data observability has become essential not only for operational efficiency but also for fostering trust and resilience within organizations.

Visionary Insight: Companies investing in data observability today are building the foundation for agility, resilience, and data-driven success. Observability transforms data from a potential risk into a powerful asset that supports sustainable growth and operational excellence.

2. The Five Core Pillars of Data Observability

An effective data observability framework rests on five core pillars, each addressing a critical dimension of data health. These pillars collectively provide a holistic view of data, enabling proactive management of data quality, availability, and compliance across all organizational systems.

2.1 Data Freshness, Timeliness, and Availability

Data that is outdated or inaccessible when needed can lead to misguided decisions, lost opportunities, and operational risks. Ensuring data freshness, timeliness, and availability guarantees that decision-makers are working with the most current and accessible information.

Example in Practice: For a retail company, real-time sales and inventory data are crucial to adapt to customer demands. Observability ensures this data is constantly updated, empowering the company to prevent stockouts and optimize supply chain efficiency.
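A freshness check can be reduced to comparing a dataset's last update time with an agreed maximum age. The sketch below assumes the pipeline records a last-updated timestamp for each table; the function name `is_fresh` and the 15-minute window are illustrative choices, not taken from any particular tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_fresh(last_updated: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """Return True if the data was updated within the allowed age window."""
    now = now or datetime.now(timezone.utc)
    return (now - last_updated) <= max_age


# Hypothetical usage: inventory data must be no older than 15 minutes.
checked_at = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 1, 11, 50, tzinfo=timezone.utc)
inventory_is_fresh = is_fresh(last_load, timedelta(minutes=15), now=checked_at)
```

Passing `now` explicitly keeps the check deterministic in tests; in production it would normally be left to default to the current UTC time.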

2.2 Data Quality and Integrity

Data quality encompasses the accuracy, completeness, and reliability of data. High-quality data serves as a foundation for confident decision-making, while compromised data erodes trust and leads to costly errors.

Insight from Finance: In the financial sector, even minor data inconsistencies can lead to regulatory penalties. Observability frameworks implement continuous data quality checks, such as schema validation and integrity tests, to ensure that data remains accurate and compliant at every stage.

2.3 Data Volume and Distribution

Monitoring data volume and distribution across systems is essential to prevent bottlenecks, optimize performance, and identify anomalies. Understanding data flow dynamics allows organizations to balance data loads and avoid congestion in critical systems.

Use Case: In IoT and sensor data, high data volumes are generated continuously. Observability helps manage these flows efficiently, ensuring timely data availability for real-time analytics and responsive decision-making.
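One simple way to spot a volume anomaly is to compare the current row count of a load with recent history. The sketch below uses a plain z-score; the function names and the threshold of 3 standard deviations are assumptions for illustration, and real deployments often need seasonality-aware baselines instead.

```python
from statistics import mean, stdev


def volume_zscore(history: list[int], current: int) -> float:
    """Z-score of the current row count against recent history (needs >= 2 points)."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly flat history: any deviation at all is treated as extreme.
        return 0.0 if current == mu else float("inf")
    return (current - mu) / sigma


def is_volume_anomaly(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag the current load if it is more than `threshold` deviations from the mean."""
    return abs(volume_zscore(history, current)) > threshold
```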

2.4 Data Schema Consistency

Schema consistency ensures data is structured uniformly, enabling seamless integration across systems. Without consistent schemas, data integration becomes error-prone, leading to issues in analytics and reporting.

Example in Action: In mergers and acquisitions, disparate data systems with varying schemas must be consolidated. Observability tools monitor and enforce schema consistency, reducing the friction of integration and enhancing data accuracy in the newly unified organization.
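Schema drift between two systems can be detected by comparing column-to-type mappings. The following is a minimal sketch that assumes each schema is available as a dictionary of column names to type names; the function name and the type labels are hypothetical.

```python
def schema_drift(expected: dict[str, str], actual: dict[str, str]) -> dict[str, list[str]]:
    """Compare column-name -> type mappings and report the differences."""
    shared = set(expected) & set(actual)
    return {
        "missing": sorted(set(expected) - set(actual)),
        "unexpected": sorted(set(actual) - set(expected)),
        "type_changed": sorted(c for c in shared if expected[c] != actual[c]),
    }
```

An empty list under every key means the two schemas agree; any non-empty list is a candidate for an alert or a blocked deployment.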

2.5 Data Lineage and Traceability

Data lineage tracks the origin, transformations, and final destination of data across systems. This traceability is vital for audit readiness, compliance, and building trust among stakeholders, especially in regulated industries.

Case Study: Pharmaceutical companies rely on lineage observability to track data from clinical trials through regulatory submissions, ensuring every data point is traceable and compliant with health regulations. This pillar not only supports governance but also enhances transparency and accountability.
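Lineage is naturally modelled as a directed graph, and traceability questions become graph traversals. The sketch below assumes lineage is stored as a mapping from each dataset to its direct upstream sources; the dataset names in the usage example are invented for illustration.

```python
from collections import deque


def upstream_sources(lineage: dict[str, list[str]], dataset: str) -> set[str]:
    """Return every dataset that feeds, directly or indirectly, into `dataset`."""
    seen: set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already visited; also protects against cycles
        seen.add(node)
        queue.extend(lineage.get(node, []))
    return seen


# Hypothetical lineage for a regulatory submission report.
example_lineage = {
    "submission_report": ["cleaned_trials"],
    "cleaned_trials": ["raw_trials", "site_reference"],
}
report_inputs = upstream_sources(example_lineage, "submission_report")
```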

3. Five Critical Features of Data Observability Solutions

To establish effective data observability, organizations need solutions that embody these five critical features. Each feature is designed to address a unique aspect of data health and operational resilience.

3.1 Continuous Monitoring, Assessment, and Detection

Continuous monitoring enables real-time insights into data health, alerting teams to potential issues before they escalate. It ensures uninterrupted oversight of data flows and quality, providing answers to critical questions like, “What went wrong?”

Industry Application: In e-commerce, continuous monitoring identifies abnormal customer behavior patterns, such as sudden spikes in cart abandonment rates. Observability tools can alert teams to investigate these trends in real time, helping to resolve issues promptly and protect revenue.

3.2 Alert and Triage

Effective observability solutions include intelligent alerting and triage mechanisms that prioritize issues based on severity and impact. Alerts should be targeted, providing actionable information on who needs to be notified and when.

Example: In financial services, data anomalies like duplicate transactions trigger alerts for compliance officers. A well-structured triage system ensures that these alerts are routed to the right teams for immediate action, minimizing compliance risks.
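Triage can be sketched as two steps: order alerts by severity, then route each one to an owner. The severity levels, routing table, and team names below are assumptions made for illustration only.

```python
from dataclasses import dataclass

# Lower number = more urgent. Unknown severities sort last.
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}

# Hypothetical routing table: which team handles which kind of issue.
ROUTES = {"duplicate_transaction": "compliance", "schema_change": "data-engineering"}
DEFAULT_ROUTE = "data-platform-oncall"


@dataclass
class Alert:
    kind: str
    severity: str
    message: str


def triage(alerts: list[Alert]) -> list[tuple[str, Alert]]:
    """Sort alerts by severity and pair each with the team to notify."""
    ordered = sorted(alerts, key=lambda a: SEVERITY_ORDER.get(a.severity, len(SEVERITY_ORDER)))
    return [(ROUTES.get(a.kind, DEFAULT_ROUTE), a) for a in ordered]
```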

3.3 Root Cause Analysis

Root Cause Analysis (RCA) goes beyond issue detection, identifying underlying causes to prevent recurrence. RCA allows teams to ask “Why did this happen?” and “How can we prevent it?” with clarity.

Practitioner Tip: RCA is especially valuable in complex data pipelines. Observability tools equipped with RCA capabilities can trace errors back to specific transformations or ingestion steps.

CastorDoc Insight: “Pipeline observability is crucial for identifying issues in real-time, maintaining uninterrupted data flow and high-quality data outputs.”

For example, in e-commerce, pipeline observability is essential during peak shopping periods, as it ensures that customer data is updated in real time. This seamless data flow helps prevent out-of-stock issues, optimizes the user experience, and supports accurate real-time analytics. By continuously tracking pipeline health, organizations ensure reliable data access and minimize disruptions.

3.4 Recommendations

Observability solutions should not only identify issues but also provide prescriptive recommendations for resolution. These insights accelerate the remediation process and help maintain data integrity without excessive downtime.

Insight: In healthcare, observability solutions can recommend schema adjustments to align data between clinical systems, ensuring that patient records remain consistent and accurate across departments.

3.5 Resolution, Remediation, and Prevention

Effective observability frameworks support automated remediation and prevention, allowing systems to self-correct common issues and prevent recurrence. This capability enhances reliability, resilience, and operational efficiency.

Case Study: In the telecom industry, automated remediation within observability solutions can adjust for network latency, ensuring that streaming and communication services remain uninterrupted for users.

4. Five Observation Categories

Data observability spans five observation categories, each focused on a distinct aspect of the data ecosystem. Together, these categories enable comprehensive, proactive management of data health across the organization.

4.1 Observe Data / Content

Observing Data Content ensures that the data itself remains accurate, complete, and free of corruption. This involves checking data consistency and format compliance and detecting potential anomalies. It covers data quality issues in the following dimensions:

- Completeness

- Accuracy

- Uniqueness

- Timeliness

- Anomalies and outliers

- Acceptable Variance

Example: In manufacturing, content observability validates sensor data to prevent erroneous inputs from affecting production metrics, ensuring that only accurate data influences operational decisions.

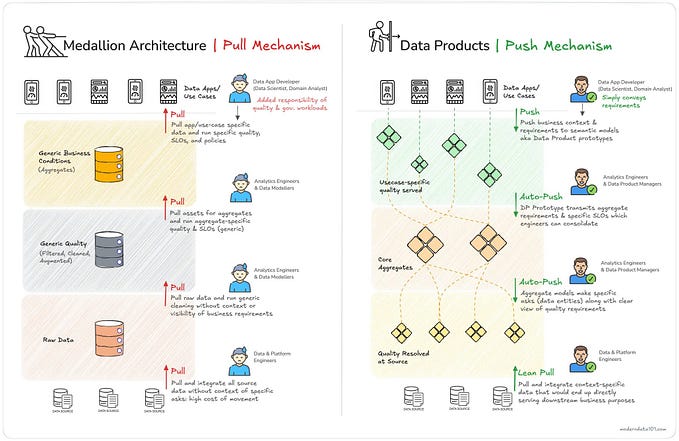

4.2 Observe Data Flow and Pipeline

Observing Data Flow and Pipeline health allows teams to monitor data movement across systems, identify potential bottlenecks, and address disruptions before they impact analytics or operational dashboards.

Use Case: Retailers use pipeline observability to monitor data movement from online transactions to inventory systems, ensuring real-time inventory updates and responsive restocking decisions.

4.3 Observe Infrastructure and Compute

Observing Infrastructure and Compute resources ensures that data systems remain performant and that resources are optimally allocated. This category focuses on preventing bottlenecks and ensuring that compute resources are not overtaxed.

Practical Example: In cloud environments, infrastructure observability monitors resource consumption to optimize costs and prevent outages due to resource shortages during peak demand.

Illustration: Data Observability Aims to Identify Possible Drifts Across Data Ecosystem Layers

This visual illustrates the various planes within a data ecosystem where drifts or shifts can occur. Data observability provides insights into potential drifts at each layer — such as code drift in the application plane, configuration drift in the control plane, and schema drift in the data plane. By identifying these deviations early, observability helps prevent potential breakpoints that could disrupt data pipelines, affect data quality, or compromise infrastructure performance.

Effective observability requires monitoring across these layers to maintain alignment and consistency throughout the data lifecycle, ensuring that data remains reliable, resilient, and fit for purpose.

4.4 Observe User, Usage & Consumption

Observing User, Usage & Consumption patterns allows organizations to track who is accessing data, how frequently, and for what purposes. This enables better governance, access control, and resource allocation.

Case Study: A global bank uses usage observability to detect unusual access patterns, enhancing security and ensuring compliance with strict data governance standards.
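Unusual access patterns can be approximated by comparing each user's access count in a window with that user's typical count. The sketch below assumes access events arrive as `(user, dataset)` pairs and that a per-user baseline is already known; the `factor` and `min_count` defaults are arbitrary illustrative values.

```python
from collections import Counter


def unusual_access(events: list[tuple[str, str]], baseline: dict[str, float],
                   factor: float = 3.0, min_count: int = 10) -> dict[str, int]:
    """Return users whose access count exceeds `factor` times their baseline.

    `min_count` suppresses noise from users with very little activity.
    """
    counts = Counter(user for user, _dataset in events)
    flagged = {}
    for user, n in counts.items():
        expected = baseline.get(user, 0.0)
        if n >= min_count and n > factor * expected:
            flagged[user] = n
    return flagged
```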

4.5 Observe Financial Cost, Impact, and Optimization

Observing Financial Cost, Impact, and Optimization ensures that data observability does not become a financial burden. By tracking the costs associated with data processing and storage, organizations can optimize expenses.

5. Overcoming Challenges in Data Observability Implementation

Despite its transformative potential, implementing data observability in complex enterprise environments presents several challenges. Addressing these obstacles is essential for achieving a resilient, transparent data ecosystem.

5.1 Multi-Cloud and Hybrid Complexities

In today’s enterprise landscape, data is scattered across on-premises, multi-cloud, and hybrid environments. Each platform may have its own monitoring tools, standards, and operational silos, which creates visibility gaps and inconsistencies. These complexities can hinder a unified approach to data observability.

Solution Approach: To overcome this, organizations should invest in a centralized observability platform that integrates with multiple data sources across different environments. By consolidating observability metrics into a single interface, teams gain a unified view of data health and can apply consistent quality standards regardless of where data resides.

Example in Practice: A global e-commerce company operating on AWS, Azure, and on-premises systems implemented a centralized observability tool to monitor customer and transaction data across all platforms. This unified view allowed them to maintain data consistency and quality during peak shopping seasons, enhancing customer experience and operational efficiency.

5.2 Breaking Down Data Silos

Data silos emerge naturally as organizations grow, with different teams or departments developing unique data management approaches. These silos lead to fragmented observability, limiting cross-functional visibility and creating inconsistencies in data quality, which hampers strategic decision-making.

Solution Approach: Breaking down silos requires standardizing observability practices across departments and fostering collaboration between data, IT, and business teams. Implementing cross-functional observability tools can ensure data remains consistent and accessible, while also aligning data quality initiatives with organizational goals.

Strategic Insight: By unifying observability practices, organizations ensure that data is treated as a shared, reliable resource across departments. This creates a cohesive view of data quality that supports both operational efficiency and strategic alignment.

5.3 Resource and Cost Constraints

Achieving robust data observability requires significant investment in technology, infrastructure, and skilled personnel. For many organizations, particularly those with large data ecosystems, these costs can be a major constraint, especially if observability initiatives are not phased or prioritized effectively.

Solution Approach: Start with a phased observability implementation that prioritizes high-impact data sources. By focusing initially on critical data flows or customer-facing systems, organizations can demonstrate early wins and justify further investment in observability infrastructure.

Case Study: A financial services provider prioritized observability for its compliance and risk management data, where accuracy and timeliness were critical. This phased approach allowed them to address regulatory requirements efficiently, proving the value of observability to stakeholders and paving the way for broader adoption across other business units.

6. Benefits of Data Observability: A Strategic Advantage

Effective data observability not only improves data quality but also enhances operational efficiency, compliance, and decision-making, providing a strategic advantage across the enterprise.

6.1 Enhanced Data Quality and Trust

Data observability ensures that data is accurate, reliable, and aligned with business requirements. By continuously monitoring and validating data quality, organizations can prevent costly errors and increase confidence in data-driven decisions.

Example: A multinational retailer improved its data observability framework to continuously monitor product inventory data across stores. This real-time visibility into inventory discrepancies enabled faster restocking and reduced stockouts, enhancing both customer satisfaction and operational efficiency.

Why It Matters: Trustworthy data is the foundation for data-driven cultures. When stakeholders trust the accuracy and integrity of data, they’re more likely to rely on it for strategic decision-making, which ultimately leads to more informed and successful business outcomes.

Illustration: Consequences of Delayed Issue Detection in Data Observability

The graph underscores the importance of early issue detection within data observability frameworks. As shown, when issues are detected early by data engineers, the impact is minimized, limited to operational delays or minor corrections. However, as the detection of data issues is delayed and discovered by business stakeholders, external customers, or even the general public, the consequences escalate — from lost time and trust to lost revenue and reputational damage. This reinforces the value of proactive data observability for preserving both customer trust and business integrity.

By enabling early detection and resolution of issues, data observability frameworks prevent these risks, supporting operational efficiency, safeguarding revenue, and building trust.

6.2 Operational Efficiency and Resilience

Data observability frameworks facilitate operational efficiency by enabling real-time issue detection and automated remediation. This reduces downtime, minimizes manual intervention, and ensures systems remain reliable under high demand.

Real-World Scenario: In telecommunications, real-time observability of network data helped a provider detect latency spikes and resolve them proactively, maintaining uninterrupted service for users. This proactive monitoring led to a 30% reduction in customer complaints and a measurable improvement in service uptime.

Why It Matters: Operational resilience is essential for maintaining a competitive edge in fast-paced markets. Observability allows organizations to anticipate and address potential disruptions, ensuring consistent, high-quality service delivery.

6.3 Compliance and Governance Assurance

Data observability supports compliance by tracking data lineage, enforcing quality standards, and ensuring that data transformations meet regulatory requirements. Observability also provides a clear audit trail, demonstrating data integrity to regulators and stakeholders.

Industry Insight: In healthcare, data observability frameworks help organizations monitor patient data across various systems, ensuring compliance with HIPAA regulations. Observability tools provide real-time alerts for any deviations from data security standards, mitigating compliance risks.

Why It Matters: Compliance breaches can be costly and damage an organization’s reputation. With robust observability, organizations reduce these risks, maintain regulatory alignment, and foster trust among customers and regulators.

7. Twelve Mantras for Implementing Data Observability

To fully harness the power of data observability, organizations must adopt a strategic approach to its implementation. Event-based, reactive monitoring is no longer enough to manage complex, multi-cloud data ecosystems effectively. The following 12 mantras provide a structured roadmap to transform observability from a technical capability into a core element of an organization’s data strategy, driving resilience, compliance, and business alignment.

7.1 Develop a Data Dictionary / Business Glossary

A data dictionary or business glossary creates a standardized language for data across the organization. By defining key data terms, metrics, and values, this resource ensures consistent understanding across departments.

Implementation Tips:

- Centralize Definitions: Define data elements, acronyms, and metrics relevant to your organization, covering all departments.

- Encourage Contributions and Updates: Allow team members to contribute to the glossary and update it regularly, ensuring it remains relevant as data practices evolve.

- Integrate with Training Programs: Use the glossary in data literacy programs to reinforce shared understanding.

Strategic Insight: A comprehensive data dictionary reduces confusion, enhances cross-departmental collaboration, and supports a data-driven culture, allowing teams to use data confidently and consistently.

7.2 Implement Data Quality and Data Quality Monitoring

Data quality is foundational to trust and usability in data-driven enterprises. Data Quality Monitoring involves continuous checks to ensure data accuracy, completeness, and reliability.

Key Components:

- Data Validation and Profiling: Ensure data adheres to schema, type, and value constraints. Profiling identifies distributions, outliers, and missing values.

- Real-Time Data Quality Checks: Set up automated, real-time checks to catch issues like null values or duplicates before they impact downstream systems.

- Rule-Based Alerts and Generative AI for Rule Creation: Create customizable rules for data quality with alerts, and leverage Generative AI to auto-generate and refine these rules as data evolves.

Example in Practice: A global retailer uses automated quality checks on inventory data, ensuring stock levels reflect real-time changes, thereby optimizing stock management and reducing lost sales.
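The rule-based checks described above can be sketched with a few small functions. This example assumes records are plain dictionaries; the column names `sku` and `stock` and the zero-tolerance null threshold are hypothetical.

```python
from collections import Counter


def null_rate(rows: list[dict], column: str) -> float:
    """Fraction of rows where `column` is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r.get(column) is None) / len(rows)


def duplicate_keys(rows: list[dict], key: str) -> list:
    """Key values that appear more than once."""
    counts = Counter(r.get(key) for r in rows)
    return [k for k, n in counts.items() if n > 1]


def run_checks(rows: list[dict], key: str = "sku",
               required: tuple = ("sku", "stock"),
               max_null_rate: float = 0.0) -> list[str]:
    """Return human-readable failures; an empty list means all checks passed."""
    failures = []
    for col in required:
        rate = null_rate(rows, col)
        if rate > max_null_rate:
            failures.append(f"{col}: null rate {rate:.0%} exceeds {max_null_rate:.0%}")
    dups = duplicate_keys(rows, key)
    if dups:
        failures.append(f"duplicate {key} values: {dups}")
    return failures
```

Returning a list of failures rather than raising on the first one lets an alerting layer report every broken rule in a single notification.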

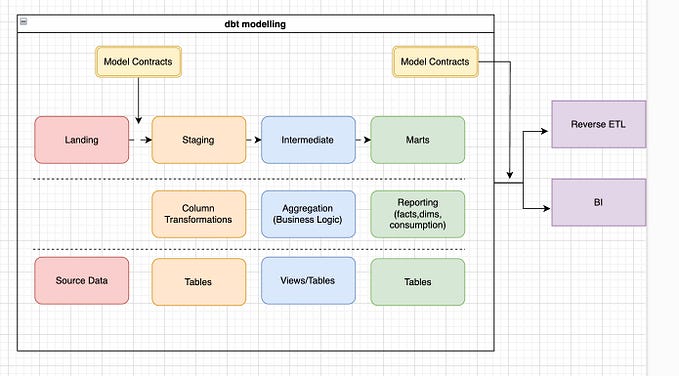

7.3 Implement End-to-End Data Lineage and Metadata Management

Data lineage provides visibility into the data’s journey across systems, from source to consumption. Metadata management adds context, detailing data origins, transformations, and usage.

Best Practices:

- Map Data Flows and Dependencies: Document how data moves across systems, capturing all transformations, dependencies, and final destinations.

- Capture and Manage Metadata: Ensure that each step in the data lifecycle captures relevant metadata, aiding in traceability and compliance.

- Automate Updates to Lineage Information: Use automated tools to update lineage documentation dynamically, keeping pace with data pipeline changes.

Strategic Insight: By establishing clear lineage, organizations enhance transparency and trust in data, making it easier to troubleshoot issues, validate data accuracy, and ensure compliance with regulatory requirements.

7.4 Establish a Centralized Observability Framework

A centralized observability framework consolidates monitoring, enabling a unified view of data health, quality, and dependencies across systems. This unified approach simplifies tracking data issues and provides a single source of truth.

Steps to Implement:

- Integrate Monitoring Tools Across Platforms: Connect observability metrics from various systems to a central dashboard.

- Enable Root Cause Analysis (RCA): Automate RCA to quickly identify the source of data issues, reducing time spent on manual troubleshooting.

- Provide Prescriptive Recommendations for Fixes: Ensure the framework offers actionable steps to resolve detected issues, accelerating remediation.

Example in Action: A financial services company implemented a centralized observability framework, enabling real-time visibility across multi-cloud environments, ensuring data quality and reducing downtime.

7.5 Leverage AI to Automate Anomaly Detection

As data volumes grow, AI-driven anomaly detection is crucial for identifying quality issues in real time. AI models detect patterns and deviations that indicate data issues, allowing proactive responses.

Core Elements:

- Machine Learning-Based Anomaly Detection: Utilize machine learning to detect unusual patterns and trends automatically.

- Define Customizable Detection Rules: Establish business-specific thresholds and rules to identify anomalies relevant to organizational goals.

- Automated Alerts for Rapid Response: Configure alerts for detected anomalies, notifying relevant teams immediately.

Example in Practice: Retailers use AI-based anomaly detection to monitor seasonal sales data, ensuring inventory levels and supply chains are adjusted for demand surges.

Strategic Insight: AI-powered observability helps organizations transition from reactive to predictive data quality management, allowing for faster issue resolution and enhanced operational efficiency.
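A trained model is beyond the scope of a short example, so the sketch below swaps in a simple statistical stand-in: the modified z-score based on the median absolute deviation (MAD), which tolerates outliers in the history better than a mean-based score. The function name and the 3.5 threshold are illustrative assumptions, and this is not a substitute for a learned detector.

```python
from statistics import median


def mad_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Indices of points whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the points equal the median; flag anything that differs.
        return [i for i, v in enumerate(values) if v != med]
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]
```

The returned indices can be handed to whatever alerting mechanism is in place, so detection and notification stay decoupled.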

7.6 Embed Observability in CI/CD and DataOps

Embedding observability into CI/CD (Continuous Integration/Continuous Deployment) and DataOps workflows ensures continuous data validation and quality checks, reducing risks during development and deployment.

Implementation Tips:

- Incorporate Quality Gates in Pipelines: Establish checkpoints in CI/CD pipelines for data validation before advancing to production.

- Automate Testing and Validation in DataOps: Set up automated data tests and validations as part of the DataOps process, reducing manual oversight.

- Enable Real-Time Issue Detection: Embed observability to detect and resolve issues during early stages of the data lifecycle, supporting agile practices.
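A quality gate can be as small as a script that runs named checks and returns a non-zero exit code when any of them fails, since CI runners generally treat a non-zero exit code as a failed step. The check names and the `id` column below are hypothetical; this is a sketch of the pattern rather than any specific tool's interface.

```python
import sys
from typing import Callable

Check = Callable[[list[dict]], bool]


def quality_gate(rows: list[dict], checks: dict[str, Check]) -> list[str]:
    """Run each named check and return the names of those that failed."""
    return [name for name, check in checks.items() if not check(rows)]


def main(rows: list[dict]) -> int:
    """Return 0 if every check passes, 1 otherwise (suitable for sys.exit)."""
    checks: dict[str, Check] = {
        "non_empty": lambda rs: len(rs) > 0,
        "no_null_ids": lambda rs: all(r.get("id") is not None for r in rs),
    }
    failed = quality_gate(rows, checks)
    for name in failed:
        print(f"quality gate failed: {name}", file=sys.stderr)
    return 1 if failed else 0
```

In a pipeline step this would typically be invoked as `sys.exit(main(rows))` after loading a sample of the data to be promoted.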

Practitioner Tip: A good data observability solution needs a strong foundation in “Data Pipeline Observability”.

Data Pipeline Observability

Current data management initiatives focus on data quality but fail to account for data pipelines, infrastructure, users and financial impact, all of which affect the perceived value of their efforts. As data engineers continue to grapple with securing data pipelines and accounting for drift, these uncoordinated efforts only increase the friction between the business and IT. Data pipelines serve as the arteries of modern data ecosystems, carrying information from sources to destinations across various applications. Data Pipeline Observability monitors key metrics within these pipelines — such as latency, throughput, and error rates — to ensure that data flows consistently and without interruption.
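The three pipeline metrics named above can be derived from a handful of counters captured per run. The sketch below assumes each run records its input count, failure count, and start and end times; the `RunStats` structure and field names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    records_in: int
    records_failed: int
    started_at: float   # seconds since epoch
    finished_at: float  # seconds since epoch


def pipeline_metrics(run: RunStats) -> dict[str, float]:
    """Compute latency, throughput, and error rate for one pipeline run."""
    duration = run.finished_at - run.started_at
    processed = run.records_in - run.records_failed
    return {
        "latency_seconds": duration,
        "throughput_per_second": processed / duration if duration > 0 else 0.0,
        "error_rate": run.records_failed / run.records_in if run.records_in else 0.0,
    }
```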


7.7 Implement Data Security, Compliance, and Transparency

Ensuring data security, compliance, and transparency within observability frameworks is crucial, especially in highly regulated industries.

Core Security Components:

- Data Masking and Encryption: Protect sensitive data to prevent unauthorized access and mitigate compliance risks.

- Access and Usage Monitoring: Track data access patterns to detect unauthorized activities and enforce data governance policies.

- Compliance Reporting: Automate compliance reporting for regulations like GDPR and CCPA, streamlining audit readiness.

Strategic Insight: Integrating security and compliance within observability frameworks fosters customer trust, reduces regulatory risks, and protects sensitive data.
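Masking and pseudonymization can be illustrated with the Python standard library. The sketch below covers only those two techniques, not encryption at rest or in transit; the function names are hypothetical, and key management is assumed to be handled elsewhere.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a sensitive value with a keyed hash so records can still be joined."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        return "*" * len(email)  # not a recognisable address: hide everything
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"
```

A keyed hash (HMAC) is used rather than a bare hash so that someone without the key cannot recover values by hashing guesses.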

7.8 Performance Monitoring

Performance observability ensures that data remains accessible and systems operate efficiently, particularly in customer-facing applications.

Implementation Steps:

- Track Latency and Availability: Monitor latency, data processing times, and system uptime to ensure reliable performance.

- Set Performance Benchmarks and SLAs: Define performance expectations and track compliance with Service Level Agreements (SLAs).

- Identify and Address Bottlenecks Proactively: Use observability data to identify and resolve performance issues before they impact end-users.

Example: Financial institutions use performance observability to minimize transaction latency, ensuring timely processing for high-frequency trading systems.
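SLA tracking for latency is often expressed as a percentile target rather than an average. The sketch below computes a nearest-rank percentile and compares it with an SLA value; the function names, the 95th percentile default, and the millisecond unit are assumptions for illustration.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def sla_report(latencies_ms: list[float], sla_ms: float, pct: float = 95.0) -> dict:
    """Compare the observed latency percentile with the SLA target."""
    observed = percentile(latencies_ms, pct)
    return {
        "percentile": pct,
        "observed_ms": observed,
        "sla_ms": sla_ms,
        "met": observed <= sla_ms,
    }
```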

7.9 Audit Third-Party Datasets for Transparency, Ethics, and Reliability

Auditing third-party datasets is essential for ensuring external data aligns with internal quality, ethical, and transparency standards.

Best Practices:

- Establish Data Quality Standards: Define standards for data accuracy, consistency, and reliability, and require third-party providers to meet them.

- Conduct Routine Quality Audits: Periodically review third-party data for quality and relevance to ensure compliance with organizational standards.

- Maintain Transparency with Data Sources: Ensure third-party data sources are transparent and traceable, aligning with regulatory and ethical requirements.

Strategic Value: Third-party data audits protect against risks associated with external data sources, ensuring that decisions are based on reliable and compliant data.

7.10 Foster Cross-Departmental Collaboration

Cross-departmental collaboration is critical to effective observability. Aligning data, IT, and business teams ensures consistent practices and supports organizational goals.

Actionable Steps:

- Conduct Joint Training Sessions: Educate teams on data observability’s role in supporting organizational goals.

- Use Shared Observability Tools: Implement shared tools that allow departments to access observability metrics relevant to their needs.

- Align Observability Goals with Business Objectives: Ensure observability initiatives support overarching business goals, fostering accountability across teams.

Strategic Insight: Collaborative observability practices strengthen data initiatives by promoting shared responsibility and aligning efforts with business objectives.

7.11 Implement Automated Remediation and Prevention

Automated remediation enables self-healing in data pipelines, reducing downtime and supporting continuous data quality improvement.

Key Components:

- Workflow Automation for Issue Resolution: Automate responses to common data quality issues, minimizing manual intervention.

- Integrate Remediation with CI/CD Pipelines: Ensure data validation and quality checks are part of CI/CD pipelines, resolving issues early.

- Develop Self-Healing Pipelines: Use machine learning to create self-healing capabilities that automatically address common data anomalies.

Example in Action: An IoT company uses self-healing pipelines to correct errors in sensor data, ensuring clean, actionable data flows into their analytics platforms.
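A rule-based version of self-healing, simpler than the machine-learning approach mentioned above, is to drop duplicate deliveries and quarantine implausible readings instead of letting them flow downstream. The field names `sensor_id`, `ts`, and `value` and the valid range are hypothetical.

```python
def remediate(readings: list[dict], low: float, high: float) -> tuple[list[dict], list[dict]]:
    """Split readings into clean rows and quarantined rows.

    Illustrative rules: drop repeats of the same (sensor_id, ts) pair, and
    quarantine values that are missing or outside the range [low, high].
    """
    clean, quarantined, seen = [], [], set()
    for r in readings:
        key = (r.get("sensor_id"), r.get("ts"))
        if key in seen:
            continue  # duplicate delivery of a reading already handled
        seen.add(key)
        value = r.get("value")
        if value is None or not (low <= value <= high):
            quarantined.append(r)
        else:
            clean.append(r)
    return clean, quarantined
```

Quarantining rather than deleting keeps the rejected rows available for root cause analysis.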

7.12 Implement Data Literacy and Digital Fluency

Data literacy empowers all employees to understand, interpret, and act on data responsibly. This competency is crucial for a data-driven organization.

Implementation Focus:

- Data Literacy Training Programs: Develop courses covering data basics, analytics interpretation, and governance principles.

- Align Literacy Programs with Business Goals: Tailor training to support specific business objectives, making data literacy relevant to each department.

- Promote Digital Fluency: Equip employees with the skills to use data tools and observability platforms, empowering them to leverage insights effectively.

Strategic Insight: A data-literate workforce is essential for fostering a data-driven culture, strengthening decision-making, and enhancing the organization’s ability to act on observability insights.

8. Future Trends in Data Observability

As data observability continues to evolve, emerging trends like AI, automation, and enhanced data governance are elevating its role from a reactive monitoring tool to a proactive, strategic asset. These innovations are enabling organizations to manage data proactively, ensuring compliance, enhancing agility, and bolstering resilience in a complex, dynamic data environment.

According to Gartner’s Chief Data and Analytics Officer Agenda Survey for 2024, 22% of respondents reported that they have already implemented data observability tools, with another 38% planning pilots or deployments within the next year. Within two years, 65% of D&A leaders expect data observability to become a core element of their data strategy. This rising adoption is largely driven by the demand for organizations to leverage emerging technologies like generative AI (GenAI), where robust data quality and observability are essential.

As Gartner’s 2024 CIO and Technology Executive Survey further highlights, 34% of leaders plan to deploy GenAI in 2024, with GenAI ranking as the top emerging technology slated for adoption. This underscores observability’s importance in enabling data reliability and integrity as organizations adopt advanced AI tools. Below are key trends transforming data observability into a powerful enabler of operational excellence and strategic growth.

8.1. AI and Automation: Moving Toward Predictive Observability

AI and automation are rapidly advancing data observability from reactive monitoring to predictive insights. By leveraging machine learning, predictive observability can anticipate issues before they impact operations, enabling proactive interventions.

Strategic Impact:

- Pattern Recognition and Early Intervention: AI-driven observability tools analyze historical and real-time data to identify patterns, forecast potential issues, and prioritize areas with high business impact.

- Operational Efficiency and Faster Resolution: AI-powered observability reduces time to resolution by automating root cause analysis and suggesting prescriptive actions, enabling data teams to focus on high-value tasks.

Visionary Insight: “AI-driven observability transforms monitoring from reactive to predictive, allowing teams to proactively ensure data quality and availability.”

Example in Practice: A large retail organization uses AI observability to predict inventory needs based on consumer behaviour trends, enabling timely stock adjustments during peak seasons. In the financial sector, institutions leverage AI-based anomaly detection to spot irregularities in transaction data, minimizing fraud risk and enhancing customer trust.
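Illustrative Sketch: The pattern-recognition idea above can be shown in its simplest form by comparing each new value of a pipeline metric against its recent history. The example below is a minimal statistical baseline (a trailing z-score), not a machine learning model and not any vendor's method. The metric (daily row counts), the window size, and the threshold are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def detect_anomalies(values, window=7, threshold=3.0):
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` sample standard deviations.

    `window` and `threshold` are illustrative defaults, not recommendations.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily row counts for one table; the final drop is injected.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015, 1008, 1002, 400]
print(detect_anomalies(daily_row_counts))  # prints [9]
```

A production system would typically account for seasonality and exclude known-bad points from the baseline. The sketch only shows how a learned baseline turns raw metrics into an early warning.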

8.2. Enhanced Data Governance: Automated Compliance for Evolving Standards

With ever-evolving data privacy and security regulations, observability has become essential for effective data governance. By incorporating automated compliance alerts and lineage tracking, observability supports adherence to regulatory requirements while enhancing transparency.

Core Benefits:

- Real-Time Compliance Monitoring: Automated alerts notify teams of potential governance issues, enabling timely intervention and reducing regulatory risk.

- Lineage and Traceability for Accountability: Observability platforms that track data lineage in real time make it easier for organizations to demonstrate compliance, especially in heavily regulated sectors like healthcare and finance, where regulations such as GDPR, CCPA, and HIPAA require stringent data controls.

Decube Perspective: “Observability with automated compliance alerts helps organizations ensure data quality while aligning with regulatory standards.”

Example in Action: A healthcare provider employs observability to monitor data access, helping ensure that the handling of patient information complies with HIPAA requirements. This proactive approach not only safeguards sensitive data but also reinforces stakeholder trust by maintaining transparency and compliance.
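Illustrative Sketch: To make the combination of lineage tracking and automated compliance alerts concrete, the example below flags access events where a role outside an allow-list reads a dataset that is sensitive, either directly or because it is derived from a sensitive source. Everything here is hypothetical: the dataset names, the roles, the lineage map, and the policy itself. It is a sketch of the mechanism under those assumptions, not an implementation of HIPAA or of any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    user: str
    role: str
    dataset: str


# Hypothetical lineage: each dataset maps to the datasets it is derived from.
LINEAGE = {
    "patient_records": [],
    "site_metrics": [],
    "billing_summary": ["patient_records"],
    "ops_dashboard": ["billing_summary", "site_metrics"],
}

# Hypothetical policy: sensitive sources and the roles allowed to read them.
SENSITIVE_SOURCES = {"patient_records"}
ALLOWED_ROLES = {"clinician", "compliance_officer"}


def upstream(dataset, lineage):
    """Return every dataset that `dataset` is derived from, directly or not."""
    seen = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(lineage.get(node, []))
    return seen


def is_sensitive(dataset, lineage):
    """Assumption: derived datasets inherit sensitivity from their sources."""
    return dataset in SENSITIVE_SOURCES or bool(
        upstream(dataset, lineage) & SENSITIVE_SOURCES
    )


def compliance_alerts(events, lineage):
    """Return one alert string per access that violates the sample policy."""
    return [
        f"ALERT: {e.user} ({e.role}) accessed {e.dataset}"
        for e in events
        if is_sensitive(e.dataset, lineage) and e.role not in ALLOWED_ROLES
    ]


events = [
    AccessEvent("alice", "clinician", "patient_records"),
    AccessEvent("bob", "analyst", "ops_dashboard"),
    AccessEvent("carol", "analyst", "site_metrics"),
]
print(compliance_alerts(events, LINEAGE))
```

Only the second event raises an alert: the dashboard is two steps downstream of patient records, which is the kind of indirect exposure that lineage makes visible. Treating all derived data as sensitive is a deliberately conservative choice for the sketch; real policies often distinguish de-identified or aggregated outputs.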

8.3. Visionary Insight: Leading with AI-Driven Observability

Organizations that embrace AI-driven observability will gain a competitive edge in agility, compliance, and resilience. Predictive insights and automated governance tools enable them to adapt swiftly to changes in data and regulations, outpacing competitors who rely on traditional, reactive monitoring approaches.

Visionary Insight: “Organizations leveraging AI-driven observability will lead in agility and compliance, meeting rising expectations for data quality and transparency.”

Strategic Advantage: Companies that implement AI-driven observability frameworks can navigate complex data ecosystems more effectively, ensuring that data remains a strategic asset rather than a risk. By staying ahead in observability technology, organizations strengthen their data management capabilities, supporting sustainable growth and operational resilience.

9. Conclusion: Data Observability as a Strategic Advantage

Data observability is more than a technical tool — it is a strategic enabler that ensures data quality, transparency, and trust. In complex data environments, robust observability is essential for organizations looking to drive operational efficiency, ensure compliance, and make data-driven decisions with confidence.

Reflective Summary: Observability shifts data management from reactive troubleshooting to proactive stewardship, enabling organizations to identify, resolve, and prevent issues in real time. This proactive approach builds resilience, positioning data as a reliable foundation for innovation and strategic initiatives.

Strategic Call to Action

Data observability is now essential for maintaining reliable data pipelines, supporting advanced analytics, and enabling emerging AI and GenAI applications. Leaders should assess their current observability frameworks and prioritize key enhancements in areas such as:

- Pipeline Observability: Ensuring seamless data flow across ecosystems to prevent bottlenecks.

- Centralized Monitoring: Creating a unified, consistent view of data quality across the organization.

- AI-Driven Insights: Implementing predictive monitoring for faster issue resolution and continuous improvement.

A well-designed observability framework safeguards data quality, enhances agility, and aligns with evolving business needs. Leaders are encouraged to foster cross-departmental collaboration, leverage automation, and emphasize security, building a comprehensive observability strategy that supports long-term success.

Final Thought: Observability should be viewed not only as a technical capability but as a strategic investment in data integrity, operational agility, and organizational growth.

Final Quote: “Investing in data observability empowers organizations to manage data assets proactively, supporting resilience, compliance, and strategic growth,” states a thought leader in data strategy.